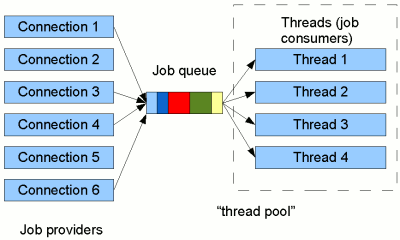

Figure 1: Typical thread pool architecture.

Thread pools in Java

In our previous discussion of how threads work, we discussed the overhead of thread creation and the fact that there is a fairly moderate limit on the number of threads that we can run simultaneously. When we know that our application will continually receive short-lived tasks to execute in parallel, a thread pool alleviates these shortcomings.
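To see the saving in practice, here is a minimal sketch using the standard `java.util.concurrent` API: it runs many short tasks on a fixed pool and records which threads actually executed them. The class name `PoolReuseDemo`, the method `runTasks` and the figures of 1,000 tasks and 4 threads are hypothetical choices for illustration.

```java
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

public class PoolReuseDemo {
    // Runs taskCount short tasks on a pool of poolSize threads and returns the
    // names of the distinct threads that actually executed them.
    public static Set<String> runTasks(int taskCount, int poolSize) throws InterruptedException {
        ExecutorService pool = Executors.newFixedThreadPool(poolSize);
        Set<String> threadNames = ConcurrentHashMap.newKeySet();
        for (int i = 0; i < taskCount; i++) {
            // Each "task" just records the name of the thread running it.
            pool.execute(() -> threadNames.add(Thread.currentThread().getName()));
        }
        pool.shutdown(); // accept no new tasks; already-submitted ones still run
        if (!pool.awaitTermination(10, TimeUnit.SECONDS)) {
            throw new IllegalStateException("pool did not finish in time");
        }
        return threadNames;
    }

    public static void main(String[] args) throws InterruptedException {
        Set<String> names = runTasks(1_000, 4);
        // 1,000 jobs ran, but no more than 4 threads were ever created for them.
        System.out.println(names.size() + " threads ran 1000 tasks: " + names);
    }
}
```

The thread-creation cost is paid at most four times rather than a thousand, and the number of live threads never exceeds the pool size.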

The basic idea is as follows:

- whenever we want a job performing, instead of creating a thread for it directly, we put it on to a queue;

- a (fairly) fixed set of long-running threads each sit in a loop pulling jobs off the queue and running them.
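The two steps above can be sketched by hand with a `BlockingQueue` and a handful of worker threads. This is a deliberately minimal illustration, not how `ThreadPoolExecutor` is implemented; the class `SimpleThreadPool` and its methods `submit` and `shutdownNow` are hypothetical names, and shutdown simply interrupts the workers and abandons any jobs still queued.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.atomic.AtomicInteger;

public class SimpleThreadPool {
    private final BlockingQueue<Runnable> jobQueue = new LinkedBlockingQueue<>();
    private final List<Thread> workers = new ArrayList<>();

    // Start a fixed set of long-running worker threads up front.
    public SimpleThreadPool(int nThreads) {
        for (int i = 0; i < nThreads; i++) {
            Thread t = new Thread(this::workerLoop, "worker-" + i);
            workers.add(t);
            t.start();
        }
    }

    // Each worker sits in this loop, pulling jobs off the queue and running them.
    private void workerLoop() {
        try {
            while (true) {
                Runnable job = jobQueue.take(); // blocks until a job is available
                try {
                    job.run();
                } catch (RuntimeException e) {
                    // Don't let one failing job kill the worker thread.
                    e.printStackTrace();
                }
            }
        } catch (InterruptedException e) {
            // Interrupted by shutdownNow(): leave the loop and let the thread end.
        }
    }

    // Instead of creating a thread for the job, we just put it on the queue.
    public void submit(Runnable job) {
        jobQueue.add(job);
    }

    // Interrupts all workers and waits for them to exit; queued jobs are dropped.
    public void shutdownNow() throws InterruptedException {
        for (Thread t : workers) t.interrupt();
        for (Thread t : workers) t.join();
    }

    public static void main(String[] args) throws InterruptedException {
        SimpleThreadPool pool = new SimpleThreadPool(4);
        AtomicInteger done = new AtomicInteger();
        CountDownLatch latch = new CountDownLatch(100);
        for (int i = 0; i < 100; i++) {
            pool.submit(() -> { done.incrementAndGet(); latch.countDown(); });
        }
        latch.await(); // wait until every job has run
        pool.shutdownNow();
        System.out.println("jobs completed: " + done.get()); // jobs completed: 100
    }
}
```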

The overall scheme is shown in Figure 1. It's arguable whether we should see the job queue as an internal part of the thread pool or as a separate unit, as illustrated.

We show it separately in Figure 1 simply because it's something that we can configure (e.g. to prioritise jobs). But in reality the pool and queue can be quite tightly coupled: for example, in Sun's implementation of ThreadPoolExecutor, the queue is completely bypassed when there are spare threads waiting for a job.
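As one example of configuring the queue, here is a sketch that passes a `PriorityBlockingQueue` to `ThreadPoolExecutor` so that waiting jobs run highest-priority first. The names `PriorityPoolDemo`, `PrioritizedJob` and `runInPriorityOrder` are hypothetical. Two points are worth flagging. First, jobs must go in via `execute()`: `submit()` wraps each job in a `FutureTask`, which is not `Comparable`, so the priority queue could not order it. Second, the `ThreadPoolExecutor` documentation states that while fewer than `corePoolSize` threads are running, a new job is handed to a newly created thread rather than queued; the sketch relies on this so that its first job occupies the single worker while the rest pile up in the queue.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.PriorityBlockingQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

public class PriorityPoolDemo {
    // A job carrying a priority; higher numbers should run first.
    static final class PrioritizedJob implements Runnable, Comparable<PrioritizedJob> {
        final int priority;
        final Runnable action;

        PrioritizedJob(int priority, Runnable action) {
            this.priority = priority;
            this.action = action;
        }

        @Override public void run() { action.run(); }

        @Override public int compareTo(PrioritizedJob other) {
            // The queue hands out the "smallest" element first, so compare in
            // reverse to make higher priorities come out first.
            return Integer.compare(other.priority, this.priority);
        }
    }

    public static List<Integer> runInPriorityOrder(int... priorities) throws InterruptedException {
        // One worker thread; waiting jobs are ordered by PrioritizedJob.compareTo.
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                1, 1, 0L, TimeUnit.MILLISECONDS, new PriorityBlockingQueue<Runnable>());
        List<Integer> order = Collections.synchronizedList(new ArrayList<>());
        CountDownLatch gate = new CountDownLatch(1);

        // No threads exist yet, so this job goes straight to a new worker
        // thread (not through the queue) and blocks it until the gate opens.
        pool.execute(new PrioritizedJob(Integer.MAX_VALUE, () -> {
            try {
                gate.await();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }));
        for (int p : priorities) {
            // execute(), not submit(): these jobs must stay Comparable in the queue.
            pool.execute(new PrioritizedJob(p, () -> order.add(p)));
        }
        gate.countDown(); // free the worker; it now drains the queue by priority
        pool.shutdown();  // queued jobs still run before the pool terminates
        if (!pool.awaitTermination(10, TimeUnit.SECONDS)) {
            throw new IllegalStateException("jobs did not finish in time");
        }
        return order;
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(runInPriorityOrder(2, 9, 5, 1, 7)); // [9, 7, 5, 2, 1]
    }
}
```

With a single worker the run order is fully determined by the queue; with several workers, jobs are still taken in priority order but may finish in any order.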

Aside from that argument, the diagram shows a classic scenario where the "jobs" are incoming connections to a server that need servicing (in this case, the threads are sometimes called "worker threads"). Indeed, if you're using a bog-standard application server or a Servlet runner (such as Tomcat), your application is very probably running in a thread pool architecture without you necessarily realising it.
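To make the worker-thread scenario concrete, here is a minimal sketch of a server in which one thread accepts connections and hands each one to a fixed pool as a job. The class `PooledEchoServer`, its "echo one line in upper case" protocol and the pool size are all hypothetical; a real application server or Servlet runner such as Tomcat is far more elaborate than this.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.io.OutputStreamWriter;
import java.io.PrintWriter;
import java.net.InetAddress;
import java.net.ServerSocket;
import java.net.Socket;
import java.nio.charset.StandardCharsets;
import java.util.Locale;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

public class PooledEchoServer {
    private final ServerSocket serverSocket;
    private final ExecutorService workers;

    public PooledEchoServer(int nWorkers) throws IOException {
        // Port 0 lets the OS pick a free port; listen on loopback only.
        this.serverSocket = new ServerSocket(0, 50, InetAddress.getLoopbackAddress());
        this.workers = Executors.newFixedThreadPool(nWorkers);
    }

    public int port() { return serverSocket.getLocalPort(); }

    // Accept loop: this thread only accepts; each connection becomes a pool job.
    public void serve() {
        try {
            while (true) {
                Socket client = serverSocket.accept();
                workers.execute(() -> handle(client));
            }
        } catch (IOException e) {
            // accept() throws once close() has closed the server socket.
        } finally {
            workers.shutdown(); // let in-flight connections finish, then stop
        }
    }

    // Runs on a worker thread: read one line and send it back in upper case.
    private static void handle(Socket client) {
        try (Socket s = client;
             BufferedReader in = new BufferedReader(
                     new InputStreamReader(s.getInputStream(), StandardCharsets.UTF_8));
             PrintWriter out = new PrintWriter(
                     new OutputStreamWriter(s.getOutputStream(), StandardCharsets.UTF_8), true)) {
            String line = in.readLine();
            if (line != null) out.println(line.toUpperCase(Locale.ROOT));
        } catch (IOException e) {
            // A problem with this one connection; the worker thread carries on.
        }
    }

    public void close() throws IOException { serverSocket.close(); }

    // Tiny client for the demo: send one line, return the server's reply.
    public static String request(int port, String message) throws IOException {
        try (Socket s = new Socket(InetAddress.getLoopbackAddress(), port);
             PrintWriter out = new PrintWriter(
                     new OutputStreamWriter(s.getOutputStream(), StandardCharsets.UTF_8), true);
             BufferedReader in = new BufferedReader(
                     new InputStreamReader(s.getInputStream(), StandardCharsets.UTF_8))) {
            s.setSoTimeout(5000); // don't hang forever if something goes wrong
            out.println(message);
            return in.readLine();
        }
    }

    public static void main(String[] args) throws Exception {
        PooledEchoServer server = new PooledEchoServer(4);
        Thread acceptor = new Thread(server::serve, "acceptor");
        acceptor.start();
        System.out.println(request(server.port(), "hello")); // HELLO
        server.close();
        acceptor.join();
    }
}
```

The design choice to note is that the accepting thread does no real work: accepting is cheap, while servicing a connection may be slow, so the pool bounds how many connections are serviced at once.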