
Here we look at how the binary number system applies to computers.

A binary digit is usually referred to as a bit, either as a value, or as a unit of measurement. So, a binary number that is 8 digits in length can be referred to as an 8-bit number.
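As a small illustration of this terminology, here is a minimal Java sketch. The class and method names (`BitLength`, `parseBinary`, `bitsNeeded`) are hypothetical names chosen for this example. It parses an 8-digit binary string and checks how many bits its value occupies.

```java
class BitLength {
    // Parses a string of binary digits (e.g. "10110010") into its numeric value.
    static int parseBinary(String bits) {
        return Integer.parseInt(bits, 2);
    }

    // Number of binary digits needed to write a non-negative value (at least 1).
    static int bitsNeeded(int value) {
        return Math.max(1, 32 - Integer.numberOfLeadingZeros(value));
    }

    public static void main(String[] args) {
        int value = parseBinary("10110010");
        System.out.println(value);                         // 178
        System.out.println(bitsNeeded(value));             // 8
        System.out.println(Integer.toBinaryString(value)); // 10110010
    }
}
```

Any value from 128 to 255 needs all eight digits; smaller values are usually still written as 8-bit numbers by padding with leading zeros.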

The most fundamental size of number after the bit is generally the byte. A byte is usually considered to be the most fundamental unit of memory storage. Put another way: the computer's memory is made up of a large array of bytes; the minimum number size that the computer reads or writes at a time is a byte, which it reads or writes to a given "location" in memory. Files on a disk are also essentially seen as a series of bytes: we never (and generally can't) ask the computer for the nth bit of a file, but can ask for the nth byte (or for a given section of bytes). How individual bits are physically arranged into bytes on the given media is left up to the hardware.
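To make the "nth byte of a file" idea concrete, the following is one possible sketch using the standard `java.io.RandomAccessFile` class. The class name `NthByte` and the helper `readByteAt` are hypothetical names for this example, and the file path is whatever the caller supplies.

```java
import java.io.IOException;
import java.io.RandomAccessFile;

class NthByte {
    // Returns the byte at the given zero-based position as a value 0 to 255,
    // or -1 if the position is at or beyond the end of the file.
    static int readByteAt(String path, long position) throws IOException {
        try (RandomAccessFile file = new RandomAccessFile(path, "r")) {
            if (position >= file.length()) {
                return -1;
            }
            file.seek(position);   // move to the requested byte offset
            return file.read();    // read exactly one byte
        }
    }

    public static void main(String[] args) throws IOException {
        // Hypothetical usage: java NthByte.java somefile.bin 10
        System.out.println(readByteAt(args[0], Long.parseLong(args[1])));
    }
}
```

Note that the API offers no way to seek to an individual bit: positions are always counted in bytes, and any bit-level work is done on a byte after it has been read.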

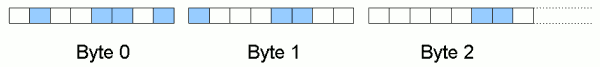

In practice, a byte is 8 bits in modern computers. Eight binary digits give 256 distinct values, so a byte generally represents a number in the range 0–255 (or possibly another range such as -128–127 when we need to allow negative numbers). The contents of memory (or of a file) can thus be envisaged as in the diagram, where each group of 8 boxes represents a single byte. When we read or write to memory, we'll generally "grab hold of" a whole group of 8 boxes together, and manipulate them together as one binary number.
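The two ranges can be seen directly in Java, whose `byte` type is signed. The sketch below shows the same eight bits read both ways; the class name `ByteRange` and the helper `asUnsigned` are hypothetical names for this example.

```java
class ByteRange {
    // Interprets the 8 bits of b as an unsigned value in the range 0 to 255.
    static int asUnsigned(byte b) {
        return b & 0xFF;
    }

    public static void main(String[] args) {
        System.out.println(1 << 8);         // 256 distinct values fit in 8 bits
        byte b = (byte) 0b1111_1111;        // all eight bits set
        System.out.println(b);              // -1 when read as a signed byte
        System.out.println(asUnsigned(b));  // 255 when read as unsigned
        System.out.println(Byte.MIN_VALUE + " to " + Byte.MAX_VALUE); // -128 to 127
    }
}
```

The standard library method `Byte.toUnsignedInt` (Java 8 and later) performs the same conversion as the `& 0xFF` mask used here.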

You may be wondering why bytes consist of exactly 8 bits rather than some other grouping. Well, largely for historical reasons. After various sizes in early computers, 8 bits emerged as the convenient size in various common processors (see note 1), such as the Intel 8080 and the ubiquitous Zilog Z80, used in early microcomputers and other electronic devices. It's probably fair to say that by around 1980, eight bits was fairly well established as the size of a byte. And since then, it has remained "convenient enough" as the basic unit of memory:

As we just mentioned in the last point, bytes are commonly combined into larger units, sometimes called words. On the next page, we look at combining bytes into larger units.

1. Generally, a size has to be chosen to be convenient for both data and machine instructions. 8 bits (256 values) is enough to accommodate common characters in English and some other languages. Designers of 8-bit processors presumably found that being able to encode 256 common instructions as one byte was a "reasonable tradeoff". And at the time, 8 bits was also generally enough to encode other things such as a pixel colour or screen coordinate. Having a byte size that is a power of 2 may also have been felt to be a "neater" design.

It is interesting to note that, for example, Marxer, E. (1974), Elements of Data Processing, describes a byte as being either 6-bit or 8-bit depending on whether the computer was of the "octal" or "hexadecimal" type.

2. It's interesting to note how, as memory capacity of computers and storage media increases, the tradeoff between data quality and what's a "convenient" number of bytes can change. At one time, 16-bit recordings (as in standard CD format) were considered the "holy grail" of digital recording; now, 24-bit audio sampling is becoming fairly common, at least in professional recording and mastering processes.

Written by Neil Coffey. Copyright © Javamex UK 2008. All rights reserved.